SuperUber

Multimedia interactive experiences that combine design, art, technology, and architecture.

An interactive, educational experience for children at Animasom in RioSul (Rio de Janeiro, Brazil), where kids explore two environments: the Amazon and the Beach. It is designed to promote sustainable interaction with nature.

In the Amazon, children encounter endangered species and playful activities such as waking sloths, changing the river’s course, and chasing ants, all while traveling along a dynamic river. On the Beach, they help clean up litter and earn fun rewards, such as beach balls or the chance to watch baby turtles hatch and head to the ocean.

-

Acted as Lead Developer, responsible for the majority of the codebase, including custom shaders, visual effects, projection mapping and body tracking.

-

Developed the codebase, engine systems, custom shaders, and visual effects.

Engineered a reactive water simulation using Unity’s experimental ECS and DOTS physics, enabling the water to respond dynamically to user movement.

Built a real-time body tracking system using Intel RealSense depth cameras.

Customized a Unity plugin for projection mapping across four projectors (two walls, two floors), all rendered from a single high-performance PC.

Optimized the entire system for performance and stability in a real-time installation environment.

Co-led the technical direction, managed the physical setup, and collaborated closely with the client to align the technical vision with creative goals.
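
To give a sense of how a water surface can react to visitor movement, here is a minimal standalone C++ sketch of a classic height-field ripple simulation. It is not the installation's code, which was built in C# on Unity's ECS and DOTS physics; the grid approach, the `RippleGrid` class, and the damping value are all assumptions chosen for illustration.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Illustrative height-field water surface; every name here is hypothetical.
class RippleGrid {
public:
    RippleGrid(std::size_t width, std::size_t height, float damping = 0.98f)
        : width_(width), height_(height), damping_(damping),
          current_(width * height, 0.0f), previous_(width * height, 0.0f) {}

    // Push the surface down at the interior cell where a visitor was detected.
    // Border cells stay at rest and act as a fixed boundary.
    void disturb(std::size_t x, std::size_t y, float strength) {
        if (x > 0 && y > 0 && x + 1 < width_ && y + 1 < height_) {
            current_[y * width_ + x] -= strength;
        }
    }

    // Advance one frame: each interior cell becomes half the sum of its four
    // neighbours minus its own height one frame ago, scaled by the damping.
    void step() {
        for (std::size_t y = 1; y + 1 < height_; ++y) {
            for (std::size_t x = 1; x + 1 < width_; ++x) {
                const std::size_t i = y * width_ + x;
                const float neighbours = current_[i - 1] + current_[i + 1] +
                                         current_[i - width_] + current_[i + width_];
                previous_[i] = (neighbours * 0.5f - previous_[i]) * damping_;
            }
        }
        std::swap(current_, previous_);  // the freshly written buffer is now current
    }

    float heightAt(std::size_t x, std::size_t y) const {
        return current_[y * width_ + x];
    }

private:
    std::size_t width_;
    std::size_t height_;
    float damping_;
    std::vector<float> current_;
    std::vector<float> previous_;
};
```

In a setup like this, each tracked body position would call `disturb` once per frame before `step`, and the resulting heights would feed the water shader.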

-

User tracking with depth cameras: Tracking silhouettes (especially of small children) was difficult due to their body size and movement. I developed a robust, real-time solution using Intel RealSense and custom logic to ensure smooth interaction for all visitors.

Performance under high load: Running four high-resolution projections from a single PC required heavy optimization. I streamlined rendering, memory usage, and CPU/GPU performance to meet real-time constraints.

Projection mapping precision: Off-the-shelf tools didn’t offer the level of control needed. I modified a Unity plugin to improve mapping accuracy and synchronization.

Client expectations: I worked directly with the client throughout development to propose solutions, adapt to feedback, and ensure the final delivery met both creative and technical ambitions.
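
As an illustration of the tracking challenge above, the following standalone C++ sketch shows one common way to pull a silhouette out of a depth frame: compare each pixel with a stored empty-room background and keep the pixels that are noticeably closer to the camera. It does not use the RealSense SDK or OpenCV and is not the installation's actual logic. The `findForeground` function, the millimetre depth units, and the treatment of 0 as "no reading" are assumptions made for the example.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Result of a foreground search; hypothetical type for this sketch.
struct Blob {
    bool found;
    float centerX;
    float centerY;
    std::size_t pixelCount;
};

// Marks pixels that are at least minDeltaMm closer than the background and
// returns their centroid. A low minPixels keeps small children detectable,
// while still rejecting isolated noisy pixels.
Blob findForeground(const std::vector<std::uint16_t>& depthMm,
                    const std::vector<std::uint16_t>& backgroundMm,
                    std::size_t width,
                    std::uint16_t minDeltaMm,
                    std::size_t minPixels) {
    Blob blob = {false, 0.0f, 0.0f, 0};
    if (width == 0 || depthMm.size() != backgroundMm.size()) return blob;

    double sumX = 0.0;
    double sumY = 0.0;
    for (std::size_t i = 0; i < depthMm.size(); ++i) {
        const int depth = depthMm[i];
        const int background = backgroundMm[i];
        if (depth == 0 || background == 0) continue;  // assumed: 0 means no reading
        if (background - depth >= static_cast<int>(minDeltaMm)) {
            sumX += static_cast<double>(i % width);
            sumY += static_cast<double>(i / width);
            ++blob.pixelCount;
        }
    }

    if (blob.pixelCount == 0 || blob.pixelCount < minPixels) return blob;
    blob.found = true;
    blob.centerX = static_cast<float>(sumX / blob.pixelCount);
    blob.centerY = static_cast<float>(sumY / blob.pixelCount);
    return blob;
}
```

A production system would typically add temporal smoothing and separate multiple visitors into distinct blobs; this sketch only covers the per-frame foreground test.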

-

Languages: C#

Libraries/Frameworks:

OpenCV (for computer vision)

Unity ECS

Unity DOTS

Hardware:

Intel RealSense depth cameras

High-lumen projectors

PC

Software Tools:

Unity Engine

RealSense SDK and Unity integration

-

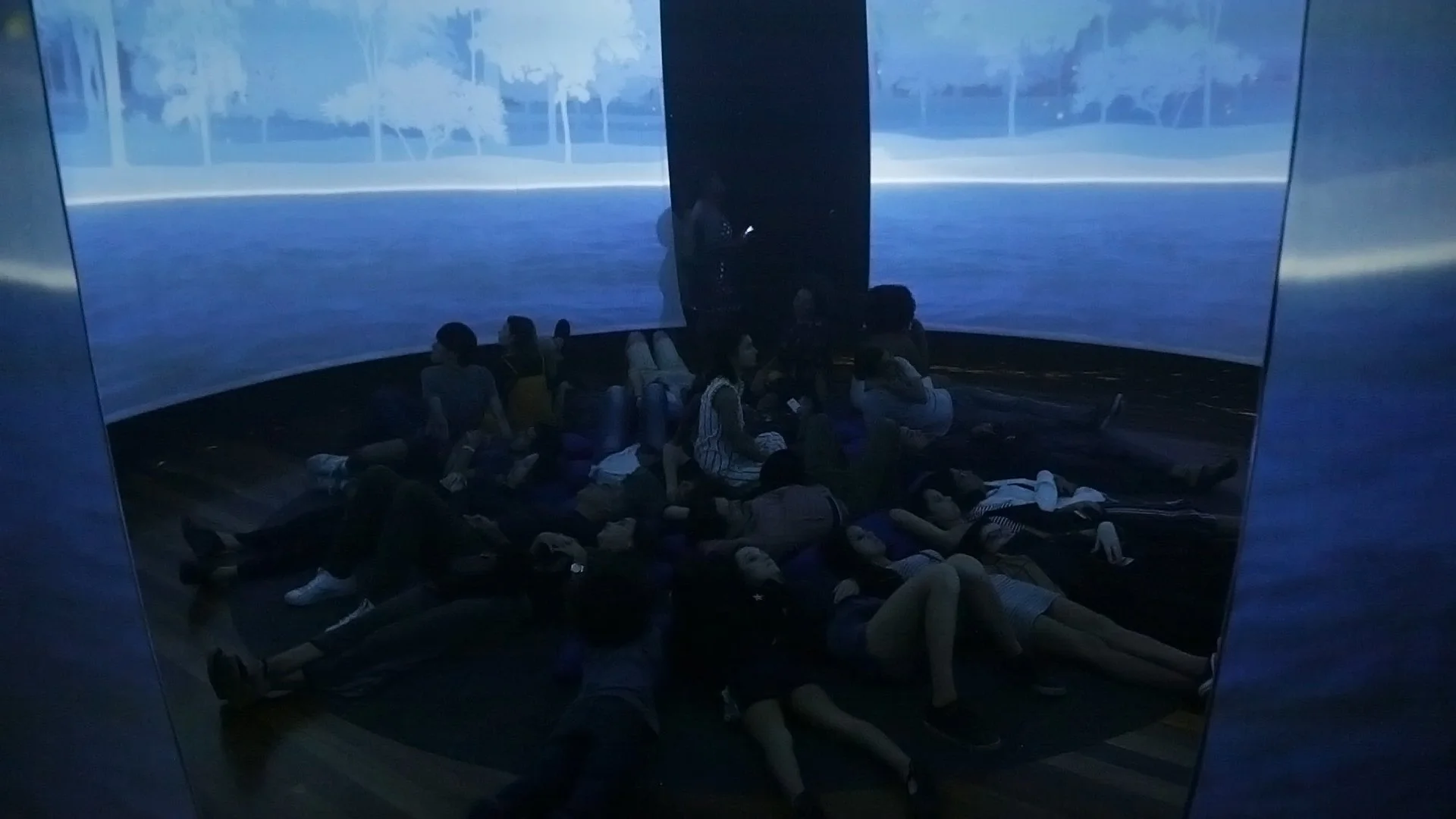

FLUXO is an immersive art installation at the Museu de Arte do Rio that explores the continuous flow of nature, time, and human movement. Visitors walk through a large interactive space where their presence generates dynamic particle trails projected onto the floor, leading to a central dome surrounded by visuals of skies, waters, and ancestral patterns. Accompanied by natural sounds and Afro-Brazilian rhythms, the experience blends art, technology, and sensory immersion to reflect the fluid interplay between the city of Rio de Janeiro and its natural environment.

-

I worked as the solo developer responsible for the interactive system.

My main contributions included:

Software development of the interactive floor, where real-time particle systems responded to visitors' movements.

Computer vision tracking using Intel RealSense cameras to detect visitors, including children.

Hardware integration across 5 synchronized PCs, each managing projectors and blending visuals in real time.

Design of user interaction, ensuring that the experience was fluid, poetic, and intuitive.

Assistance with projection mapping on the central 360° dome, whose dynamic video responded to interactions on the floor and also changed the floor's behaviour.
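
Blending visuals across neighbouring projectors is usually done by fading each image inside the overlap so that the combined brightness stays constant. The standalone C++ sketch below shows that idea with a gamma-corrected linear ramp. It is an assumption about how such blending can work in general, not the method used in FLUXO; `edgeBlendWeight` and the default gamma of 2.2 are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Returns the brightness multiplier for a pixel inside a projector overlap.
// distanceFromEdge: distance from this projector's outer edge, measured into
// the overlap; overlapWidth: total overlap width in the same units.
// The ramp is linear in emitted light, then converted to signal space with
// the display gamma, so two opposing ramps sum to full brightness.
double edgeBlendWeight(double distanceFromEdge, double overlapWidth, double gamma = 2.2) {
    if (overlapWidth <= 0.0) return 1.0;  // no overlap, no fade
    const double t = std::min(1.0, std::max(0.0, distanceFromEdge / overlapWidth));
    return std::pow(t, 1.0 / gamma);
}
```

For a pixel 30% of the way into the overlap, one projector would use `edgeBlendWeight(0.3, 1.0)` and its neighbour `edgeBlendWeight(0.7, 1.0)`; raised back to the power of gamma, the two weights add up to 1.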

-

Large-scale projection mapping: Mapped the floor of a ~20 m² room with multiple projectors, which required precise alignment and blending.

Synchronization: Five computers needed to stay perfectly in sync to render interactive particles and respond to a shared timeline of a central video piece.

Robust tracking: Implemented computer vision systems capable of tracking multiple people simultaneously, in varying lighting conditions and including children with smaller body signatures.

Network performance: Built a local network architecture optimized for real-time data exchange between computers, cameras, and projectors to ensure visual and temporal coherence.
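
To illustrate the synchronization problem, here is a standalone C++ sketch of one well-known approach: each render PC estimates its clock offset from a master with an NTP-style round trip, then derives its position in the looping video timeline from its own clock. The source does not say how the five PCs were actually synchronized, so the approach itself is an assumption, as are `SyncSample`, `estimateClockOffset`, `timelinePosition`, and the use of seconds as the time unit.

```cpp
#include <cmath>

// Four timestamps from one request/response exchange with the master PC.
struct SyncSample {
    double clientSend;     // client clock, when the request left
    double masterReceive;  // master clock, when the request arrived
    double masterSend;     // master clock, when the reply left
    double clientReceive;  // client clock, when the reply arrived
};

// NTP-style estimate of (master clock - client clock), assuming the network
// delay is roughly the same in both directions.
double estimateClockOffset(const SyncSample& s) {
    return ((s.masterReceive - s.clientSend) + (s.masterSend - s.clientReceive)) / 2.0;
}

// Position inside the looping timeline, computed locally on each PC.
// masterStart is the master-clock time at which the timeline began.
double timelinePosition(double localNow, double offset, double masterStart,
                        double loopDuration) {
    const double elapsed = localNow + offset - masterStart;
    const double position = std::fmod(elapsed, loopDuration);
    return position < 0.0 ? position + loopDuration : position;
}
```

Because every machine computes the timeline position from its own corrected clock, only occasional sync messages need to cross the network rather than a per-frame signal.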

-

Languages: C#, C++

Libraries/Frameworks: OpenCV (for computer vision)

Hardware:

Intel RealSense depth cameras

High-lumen projectors

Multi-PC setup with local networking

Software Tools:

Unity Engine

RealSense SDK and Unity integration

-